Type-safe actor messaging approaches

For notify.me I hand-rolled a simple actor system to handle all Xmpp traffic. Every user in the system has their own actor that maintains their Xmpp state, tracking online status, resources, resource capabilities, notification queues and command capabilities. When a message comes in, either via our internal notification queues or from the user, a simple dispatcher sends it on to the actor, which handles it and responds with a message of its own. The dispatcher then either hands that response off to the Xmpp bot for formatting and delivery to the client, or sends it to our internal queues for propagation to other parts of the notify.me system.

This has worked flawlessly for over 2 years now, but its ad-hoc nature means it's a fairly high-touch system in terms of extensibility. This has led me down the path of building a more general actor system. Originally Xmpp was our backbone transport among actors in the notify.me system, but at this point, I would like to use Xmpp only as an edge transport, and otherwise use in-process mailboxes and serialize via protobuf for remote actors. I still love the Xmpp model for distributing work, since nodes can just come up anywhere, sign into a chatroom and report for work. You get broadcast, online monitoring, point-to-point messaging, etc. all for free. But it means all messages go across the Xmpp backbone, which has a bit of overhead, and with thousands of actors, I'd rather stay in process when possible. No point going out to the Xmpp server and back just to talk to the actor next to you. I will likely still use Xmpp for Actor Host nodes to discover each other, but the actual inter-node communication will be direct Http-RPC (no, it's not RESTful, if it's just messaging).

Defining the messaging contract as an Interface

One design approach I'm currently playing with is using actors that expose their contract via an interface. Keeping the share-nothing philosophy of traditional actors, you still won't have a reference to an actor, but since you know its type, you know exactly what capabilities it has. That means rather than having a single receive point on the actor and making it responsible for routing the message internally based on message type (a capability that lends itself better to composition), messages can arrive directly at their endpoints by signature. Another benefit is that testing the actor behavior is separate from its routing rules.

```csharp
public interface IXmppAgent {
    void Notify(string subject, string body);
    OnlineStatus QueryStatus();
}
```

Given this contract we could just proxy the calls. So our mailbox could have a proxy factory like this:
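The factory itself would presumably be a single generic method. A minimal sketch, with the name `For` and its signature inferred from the call that follows rather than taken from a real implementation:

```csharp
// Assumed shape of the proxy factory, inferred from _mailbox.For<IXmppAgent>(...).
public interface IMailbox {
    // Returns a proxy implementing TRecipient that forwards each call
    // as a message to the actor registered under the given id.
    TRecipient For<TRecipient>(string id);
}
```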

allowing us to send messages like this:

```csharp
var proxy = _mailbox.For<IXmppAgent>("foo@bar.com");
proxy.Notify("hey", "how'd you like that?");
var status = proxy.QueryStatus();
```

But messaging is supposed to be asynchronous

While this is simple and decoupled, it is implicitly synchronous. Sure, .Notify could be considered a fire-and-forget message, but .QueryStatus definitely blocks. And if we wanted to communicate an error condition, such as not finding the recipient, we'd have to do it as an exception, moving errors into the synchronous pipeline as well. In order to retain the flexibility of a pure message architecture, we need a result handle that lets us handle results and/or errors via continuation.

My first pass at an API for this resulted in this calling convention:

```csharp
public interface IMailbox {
    void Send<TRecipient>(string id, Expression<Action<TRecipient>> message);
    Result SendAndReceive<TRecipient>(string id, Expression<Action<TRecipient>> message);
    Result<TResponse> SendAndReceive<TRecipient, TResponse>(
        string id,
        Expression<Func<TRecipient, TResponse>> message
    );
}
```

transforming the messaging code to this:

```csharp
_mailbox.Send<IXmppAgent>("foo@bar.com", a => a.Notify("hey", "how'd you like that?"));
var result = _mailbox.SendAndReceive<IXmppAgent, OnlineStatus>(
    "foo@bar.com",
    a => a.QueryStatus()
);
```

I'm using MindTouch Dream's Result<T> class here, instead of Task<T>, primarily because it's battle-tested and I have not properly tested Task under Mono yet, which is where this code has to run. In this API, .Send is meant for fire-and-forget style messaging while .SendAndReceive provides a result handle -- and if Void were an actual Type, we could have dispensed with the overload. The result handle has the benefit of letting us choose how we want to deal with the asynchronous response. We could simply block:

```csharp
var status = _mailbox.SendAndReceive<IXmppAgent, OnlineStatus>(
        "foo@bar.com",
        a => a.QueryStatus())
    .Wait();
// The {0} placeholder is required, otherwise status is never printed.
Console.WriteLine("foo@bar.com status: {0}", status);
```

or we could attach a continuation to handle it out of band of the current execution flow:

```csharp
_mailbox.SendAndReceive<IXmppAgent, OnlineStatus>(
        "foo@bar.com",
        a => a.QueryStatus()
    )
    .WhenDone(r => {
        var status = r.Value;
        Console.WriteLine("foo@bar.com status: {0}", status);
    });
```

or we could simply suspend our current execution flow, by invoking it from a coroutine:

```csharp
var status = OnlineStatus.Offline;
yield return _mailbox.SendAndReceive<IXmppAgent, OnlineStatus>(
        "foo@bar.com",
        a => a.QueryStatus()
    )
    .Set(x => status = x);
Console.WriteLine("foo@bar.com status: {0}", status);
```

Regardless of completion strategy, we have decoupled the handling of results and error conditions from the message recipient's behavior, which is the whole point of using message passing in an actor system.
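As a point of comparison only, here is a minimal sketch of the same idea using Task<T> rather than Dream's Result<T>. `QueryStatusAsync` and `Describe` are hypothetical stand-ins and not part of the mailbox API above; the sketch just shows an error (an unknown recipient) arriving in the continuation instead of being thrown on the caller's stack.

```csharp
using System;
using System.Threading.Tasks;

public static class ContinuationDemo {
    // Hypothetical stand-in for a mailbox call; fails when the recipient is unknown.
    public static Task<string> QueryStatusAsync(string id) {
        return Task.Run<string>(() => {
            if (id != "foo@bar.com") {
                throw new InvalidOperationException("no such recipient: " + id);
            }
            return "Online";
        });
    }

    // Handles both outcomes inside the continuation, so the caller
    // deals with success and failure in one place, out of band.
    public static Task<string> Describe(string id) {
        return QueryStatusAsync(id).ContinueWith(t =>
            t.IsFaulted
                ? "error: " + t.Exception.InnerException.Message
                : id + " status: " + t.Result);
    }
}
```

Calling `ContinuationDemo.Describe("foo@bar.com")` completes with the status text, while an unknown id completes with the error text. Neither case throws at the call site.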

Improving usability

Looking at the signatures there are two things we can still improve:

- If we send a lot of messages to the same recipient, the syntax is a bit repetitive and verbose

- Because we need to specify the recipient type, we also have to specify the return value type, even though it should be inferable

We can address both of these by providing a factory method for a typed mailbox:

```csharp
public interface IMailbox {
    IMailbox<TRecipient> To<TRecipient>(string id);
}

public interface IMailbox<TRecipient> {
    void Send(Expression<Action<TRecipient>> message);
    // No TResponse here: a void message only yields a completion handle.
    Result SendAndReceive(Expression<Action<TRecipient>> message);
    Result<TResponse> SendAndReceive<TResponse>(
        Expression<Func<TRecipient, TResponse>> message
    );
}
```

which lets us change our messaging to:

```csharp
var actorMailbox = _mailbox.To<IXmppAgent>("foo@bar.com");
actorMailbox.Send(a => a.Notify("hey", "how'd you like that?"));
var result2 = actorMailbox.SendAndReceive(a => a.QueryStatus());

// or inline
_mailbox.To<IXmppAgent>("foo@bar.com")
    .Send(a => a.Notify("hey", "how'd you like that?"));
var result3 = _mailbox.To<IXmppAgent>("foo@bar.com")
    .SendAndReceive(a => a.QueryStatus());
```

I've included the inline version because it is still more compact than the explicit version, since it can infer the result type.

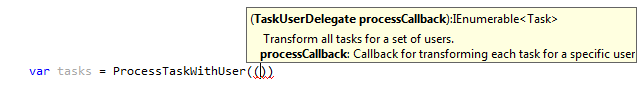

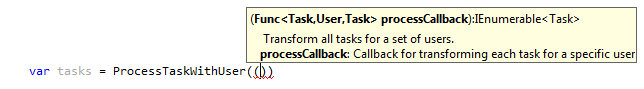

Supporting Remote Actors

The reason the mailbox uses Expression instead of raw Action and Func is that at any point an actor we're sending a message to could be remote. The moment we cross process boundaries, we need to serialize the message. That means we need to be able to programmatically inspect the expression and build a serializable AST, as well as serialize the captured data members used in the expression.
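To make the inspection step concrete, here is a minimal sketch built on System.Linq.Expressions. `MessageEnvelope` and `MessageInspector` are hypothetical names, and the sketch makes simplifying assumptions: it only accepts a single method call on the recipient, and it evaluates each argument to a plain value at send time instead of building a full AST.

```csharp
using System;
using System.Linq;
using System.Linq.Expressions;

public interface IXmppAgent {
    void Notify(string subject, string body);
}

// Hypothetical serializable description of one call; not part of any library.
public sealed class MessageEnvelope {
    public string RecipientType;
    public string Method;
    public object[] Arguments;
}

public static class MessageInspector {
    // Turns a lambda like a => a.Notify("x", y) into an envelope by reading
    // the method call and evaluating each argument expression.
    public static MessageEnvelope Inspect<TRecipient>(Expression<Action<TRecipient>> message) {
        var call = message.Body as MethodCallExpression;
        if (call == null || call.Object != message.Parameters[0]) {
            throw new ArgumentException("message must be a single method call on the recipient");
        }
        return new MessageEnvelope {
            RecipientType = typeof(TRecipient).FullName,
            Method = call.Method.Name,
            Arguments = call.Arguments.Select(Evaluate).ToArray()
        };
    }

    // Compiles the argument sub-expression so that captured locals and
    // fields are resolved to their current values.
    private static object Evaluate(Expression argument) {
        var boxed = Expression.Convert(argument, typeof(object));
        return Expression.Lambda<Func<object>>(boxed).Compile()();
    }
}
```

With this in place, `MessageInspector.Inspect<IXmppAgent>(a => a.Notify("hey", body))` yields an envelope whose Method is "Notify" and whose Arguments hold the two strings, which a serializer such as protobuf could then take over. Arguments that refer back to the lambda parameter itself are not supported by this sketch.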

Since we're talking about serialization, inspecting the expression also allows us to verify that all members are immutable. For value types, this is easy enough, but DTOs would need to be prevented from changing, so that local vs. remote invocation won't end up with different results just because the sender changed its copy. We could handle this via serialization at message send time, although this looks like a perfect place to see how well the Freezable pattern works.
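For readers unfamiliar with the idea, a freezable DTO might look roughly like the sketch below. `NotificationData` and its members are made up for illustration, and this is only one way to express the pattern: the object is mutable until Freeze() is called, after which any setter throws, and Clone() hands back a mutable copy.

```csharp
using System;

// Hypothetical message DTO; type and member names are illustrative only.
public sealed class NotificationData {
    private string _subject;
    private string _body;

    public bool IsFrozen { get; private set; }

    public string Subject {
        get { return _subject; }
        set { ThrowIfFrozen(); _subject = value; }
    }

    public string Body {
        get { return _body; }
        set { ThrowIfFrozen(); _body = value; }
    }

    // Makes this instance read-only from now on.
    public void Freeze() { IsFrozen = true; }

    // Returns an unfrozen copy that the sender can keep modifying.
    public NotificationData Clone() {
        return new NotificationData { Subject = _subject, Body = _body };
    }

    private void ThrowIfFrozen() {
        if (IsFrozen) throw new InvalidOperationException("instance is frozen");
    }
}
```

A mailbox could then require `IsFrozen` on every captured DTO before accepting a message, so that a local recipient sees the same data a remote one would have received.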